Watch: How to build a modular, production-grade GenAI chatbot using NVIDIA NIM + MLRun

In this end-to-end demo, you’ll learn how to create a multi-agent banking chatbot using NVIDIA NIM, LangChain, and MLRun. The demo walks through:

- Deploying an LLM with a production-first mindset using MLRun’s serverless application runtime

- Building an intent classification system that routes user queries to the right agent (loans, investments, general banking)

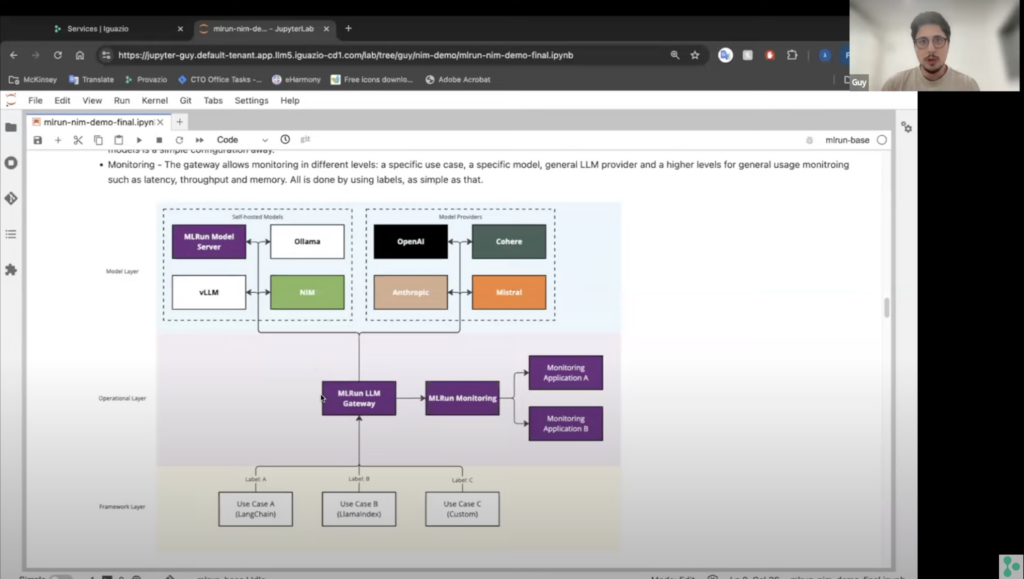

- Operationalizing and monitoring LLMs with MLRun’s built-in LLM gateway, which enables cost optimization, versioning, and use-case-level observability

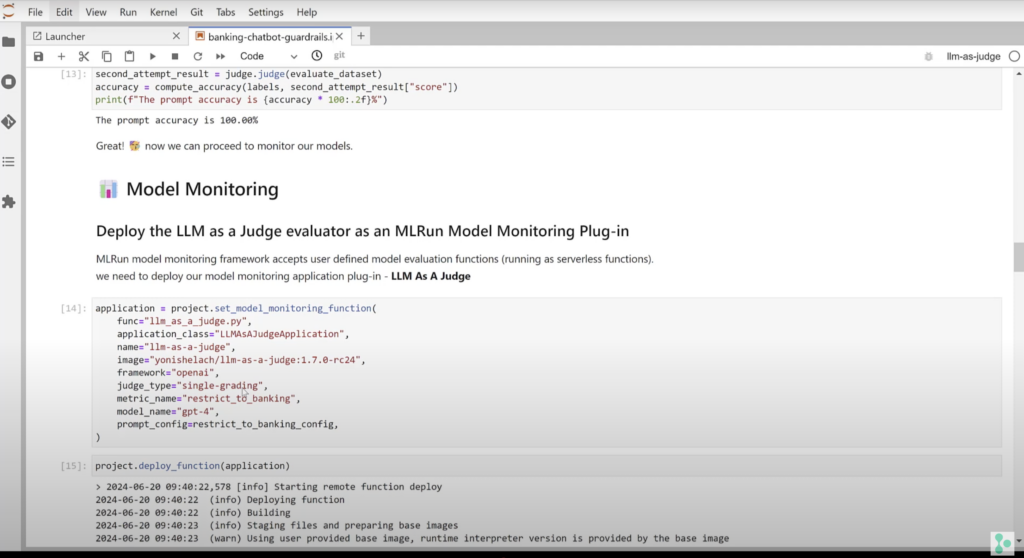

- Using LLM-as-a-judge to automatically evaluate model quality

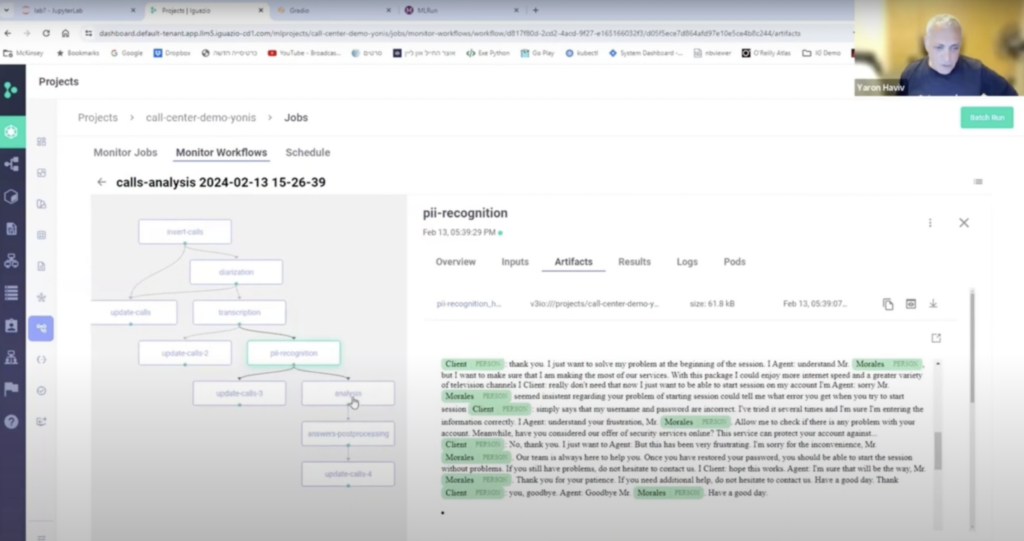

- Wrapping the entire pipeline into a reusable, trackable gen AI application using MLRun’s workflow orchestration
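To make the intent-routing step in the list above concrete, here is a minimal sketch in plain Python. It is not the demo's code: the keyword-based `classify_intent` is a stand-in for the LLM-based classifier, and the agent functions, the `AGENTS` table, and `route` are hypothetical names chosen for illustration. Only the three agent categories (loans, investments, general banking) come from the demo description.

```python
from typing import Callable, Dict


def classify_intent(query: str) -> str:
    """Stand-in classifier: keyword matching in place of an LLM call (assumption)."""
    text = query.lower()
    if any(word in text for word in ("loan", "mortgage", "borrow")):
        return "loans"
    if any(word in text for word in ("invest", "stock", "portfolio")):
        return "investments"
    # Anything that matches neither list falls back to general banking.
    return "general"


# Hypothetical agents; in a real system each would wrap its own prompt and tools.
def loans_agent(query: str) -> str:
    return f"[loans agent] {query}"


def investments_agent(query: str) -> str:
    return f"[investments agent] {query}"


def general_agent(query: str) -> str:
    return f"[general agent] {query}"


# Maps each intent label to the agent that handles it.
AGENTS: Dict[str, Callable[[str], str]] = {
    "loans": loans_agent,
    "investments": investments_agent,
    "general": general_agent,
}


def route(query: str) -> str:
    """Classify the query, then dispatch it to the matching agent."""
    intent = classify_intent(query)
    return AGENTS[intent](query)


if __name__ == "__main__":
    print(route("Can I refinance my mortgage?"))
    print(route("How should I rebalance my portfolio?"))
    print(route("What are your opening hours?"))
```

Keeping classification and dispatch in separate functions means the keyword matcher could be swapped for a model-backed classifier without touching the agents.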

See the chatbot in action as it dynamically responds to nuanced banking questions, switching between agents in real time. This demo showcases how to move beyond experimentation and build robust, traceable, and scalable gen AI systems that deliver real value.
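The LLM-as-a-judge evaluation mentioned above can be sketched as follows. This is an illustrative outline under stated assumptions, not the demo's implementation: the prompt wording, the 1 to 5 scale, the `Score: <n>` reply format, and the names `judge_answer` and `fake_judge` are all hypothetical. The judge model is passed in as a plain callable so the sketch runs offline.

```python
import re
from typing import Callable

# Hypothetical grading prompt; the real demo's prompt and scale may differ.
JUDGE_PROMPT = (
    "You are grading a banking chatbot.\n"
    "Question: {question}\n"
    "Answer: {answer}\n"
    "Rate the answer from 1 (poor) to 5 (excellent). Reply as 'Score: <n>'."
)


def judge_answer(question: str, answer: str, judge_llm: Callable[[str], str]) -> int:
    """Ask a judge model to score an answer and parse the numeric score."""
    prompt = JUDGE_PROMPT.format(question=question, answer=answer)
    reply = judge_llm(prompt)
    match = re.search(r"Score:\s*([1-5])", reply)
    if match is None:
        # Fail loudly rather than silently recording a bogus score.
        raise ValueError(f"Could not parse a score from judge reply: {reply!r}")
    return int(match.group(1))


def fake_judge(prompt: str) -> str:
    """Placeholder judge that always returns the same score (assumption)."""
    return "Score: 4"


if __name__ == "__main__":
    score = judge_answer(
        "What is the interest rate on a personal loan?",
        "Rates depend on your credit profile; please check the loans page.",
        fake_judge,
    )
    print(score)
```

In practice `fake_judge` would be replaced by a call to a hosted model, and the parsed scores would be logged alongside each response so quality can be tracked over time.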